Open rates, bounce rates, cohort analysis, click-through rates, CPC…

Do these sound familiar? I bet they do.

These are all statistical measures for describing relationships across metrics and dimensions, and they cut across the analytics dashboards used in email marketing, SEO, PPC, and other channels.

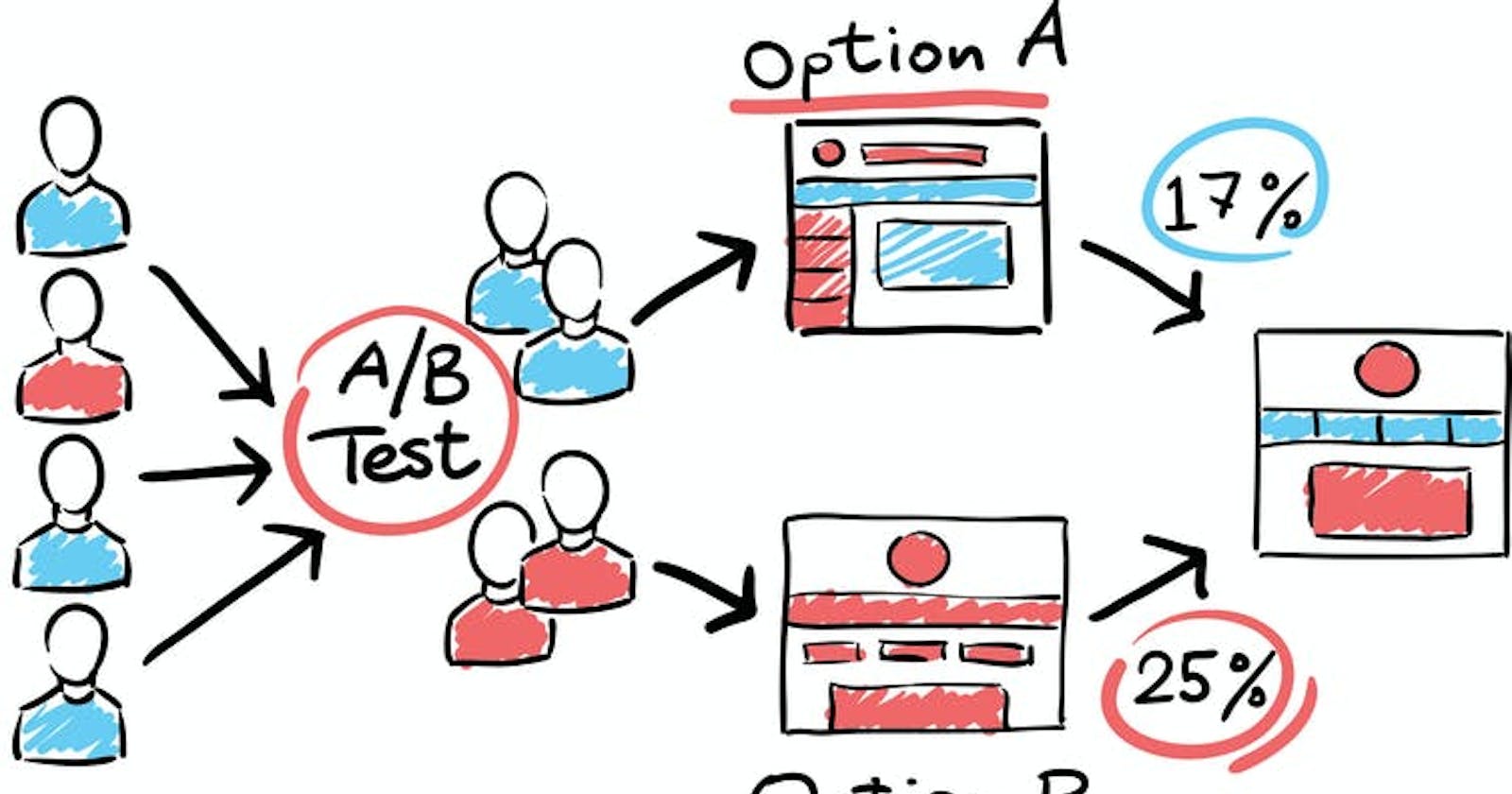

This means that, regardless of your digital marketing specialization, all analytics dashboards relay data in a common underlying language: statistics. We need to learn this language to conceptualize and communicate marketing phenomena. Without an understanding of basic statistics, we can't properly evaluate A/B test results or derive marketing insights.

This brings us to a review of statistics fundamentals for testing.

Here's the outline:

- Sampling: populations, parameters, and statistics

- Mean, variance, and standard deviation

- Confidence intervals

- Statistical significance and the p-value

- Statistical power

- Sample size and how to calculate it

- Statistics trap 1: regression to the mean and sampling error

- Statistics trap 2: too many variants

- Statistics trap 3: click rates and conversion rates

- Statistics trap 4: Frequentist vs. Bayesian test procedures

Sampling: populations, parameters, and statistics

Sampling is central to statistical inquiry because it lets us reach conclusions about a whole group without measuring every member of it. Before sampling can be applied, two concepts must first be understood: populations and parameters.

In the context of growth marketing, a population is the full set of users, people, or entities that we want to measure.

For example, to determine which coffee shop in an area serves hotter coffee, we would compare the temperatures of coffee cups from each shop.

In this scenario, there is a true population and a sample drawn from it, which give rise to true population parameters and sample statistics, respectively.

The true population is every cup of coffee each shop serves, while the sample is the set of cups whose temperatures we actually measure.

The true parameter of interest is the mean temperature of all the coffee each shop serves, while the corresponding sample statistic is the mean temperature of the cups in our sample.

We will never know the true parameter, because we can't measure the temperature of every single cup of coffee each shop produces. Instead, we make do with the sample statistic, calculated from the collection of cups we actually tested at each shop.

So we use the sample statistic (the mean temperature of the measured cups) to make inferences about the population parameter (the mean temperature of all coffee cups).

The mean and standard deviation of the true population are denoted μ (mu) and σ (sigma), while those of the sample are denoted x̄ (x-bar) and s.
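
To make the population/sample distinction concrete, here is a minimal Python sketch. It assumes, purely for illustration, a hypothetical shop whose cups are normally distributed around 70 °C with a standard deviation of 5 °C; these numbers are invented, not measurements. The sketch builds a simulated "true population" of cups, draws a random sample of 100, and compares the population parameters (μ, σ) with the sample statistics (x̄, s).

```python
import random
import statistics

# Hypothetical setup: 10,000 cups served by one shop (the "true population").
# The mean of 70 °C and standard deviation of 5 °C are invented for illustration.
random.seed(42)
population = [random.gauss(70, 5) for _ in range(10_000)]

# Population parameters: in a real study these are unknown.
mu = statistics.fmean(population)
sigma = statistics.pstdev(population)

# Sample statistics: what we can actually compute from the cups we measure.
sample = random.sample(population, 100)
x_bar = statistics.fmean(sample)
s = statistics.stdev(sample)  # sample standard deviation (divides by n - 1)

print(f"mu = {mu:.2f}, sigma = {sigma:.2f}")
print(f"x-bar = {x_bar:.2f}, s = {s:.2f}")
```

In a real test we would only ever see the second line of output; the simulation simply lets us check how close the sample statistics come to the parameters they estimate.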

Mean, variance, and standard deviation

Three other foundational concepts for understanding the statistics behind marketing experimentation are the mean, variance, and standard deviation.

If we plot the temperature of every cup, most of the points typically cluster around a central value: the mean. Because it summarizes where the data are centered, the mean is the "most common measure of central tendency".

What about variance and standard deviation?

Data can take on different shapes, and understanding this is a good way to conceptualize the outcomes of an A/B test or marketing experiment. This brings us to variance, which measures how spread out the data are. The most common measure of variability is the standard deviation: the square root of the variance, which expresses the same spread in the data's original units.

How the standard deviation is calculated, how it relates to the shape of the data, and how it changes from one sample to another explain why we need adequate sample sizes, confidence levels, and confidence intervals.

Variance is calculated by taking the average squared distance of each data point from the mean (when estimating from a sample, the sum of squared distances is divided by n − 1 instead of n), while the standard deviation is the square root of the variance.
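
The calculation described above can be sketched in a few lines of Python. The helper names `mean`, `variance`, and `std_dev` are hypothetical, written out by hand to mirror the definitions, and the five cup temperatures are invented example data; the last two lines cross-check the results against Python's built-in `statistics` module.

```python
import math
import statistics

def mean(values):
    """Hypothetical helper: the sum of the values divided by how many there are."""
    return sum(values) / len(values)

def variance(values, sample=True):
    """Hypothetical helper: average squared distance from the mean.

    With sample=True the sum of squares is divided by n - 1 (the usual
    estimate from a sample); with sample=False it is divided by n
    (the population variance).
    """
    m = mean(values)
    squared_distances = [(x - m) ** 2 for x in values]
    divisor = len(values) - 1 if sample else len(values)
    return sum(squared_distances) / divisor

def std_dev(values, sample=True):
    """Hypothetical helper: the square root of the variance."""
    return math.sqrt(variance(values, sample))

# Invented temperatures (°C) of five measured cups.
temps = [60, 62, 64, 66, 68]
print(mean(temps))                    # 64.0
print(variance(temps, sample=False))  # 8.0
print(variance(temps))                # 10.0
print(round(std_dev(temps), 3))       # 3.162

# Cross-check against the standard library.
assert math.isclose(variance(temps), statistics.variance(temps))
assert math.isclose(variance(temps, sample=False), statistics.pvariance(temps))
```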

Confidence intervals

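After variance, the next concept to cover is the confidence interval. A confidence interval is a range of values, calculated from the sample, that is expected to contain the true value of a parameter at a stated confidence level: if we repeated the sampling many times, about 95% of the 95% confidence intervals built this way would contain the true value. A confidence interval has four components: the mean, the sample size, the variability of the data, and the confidence level.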
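
As an illustration, the sketch below combines those four components into a 95% confidence interval for a conversion rate. It assumes the normal approximation (the so-called Wald interval), which is only one of several interval methods and is less reliable for small samples or rates near 0% or 100%. The function name `conversion_rate_ci` and the 200-conversions-from-2,000-visitors figures are hypothetical.

```python
import math
from statistics import NormalDist

def conversion_rate_ci(conversions, visitors, confidence=0.95):
    """Hypothetical helper: normal-approximation (Wald) CI for a conversion rate."""
    p = conversions / visitors                           # mean: the observed rate
    std_error = math.sqrt(p * (1 - p) / visitors)        # variability, shrinking with sample size
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)   # about 1.96 for 95% confidence
    margin = z * std_error                               # the margin of error
    return p - margin, p + margin

# Invented example: 200 conversions out of 2,000 visitors.
low, high = conversion_rate_ci(200, 2000)
print(f"95% CI: {low:.2%} to {high:.2%}")  # 95% CI: 8.69% to 11.31%
```

Running the same function with ten times the traffic (2,000 conversions from 20,000 visitors) gives a noticeably narrower interval around the same 10% rate, which is why larger samples produce more precise estimates.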

Implications of a confidence interval in A/B testing

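- Its margin of error represents the amount of error allowed in an A/B test

- It reflects the risk of sampling error: the higher the confidence level, the lower the risk that the interval misses the true value

- Ideally, the margins of error of the two variants shouldn't overlap; non-overlapping intervals indicate a significant difference, although some overlap does not by itself rule one out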

Statistical significance and the p-value

Statistical significance helps us quantify whether a result is likely to be due to chance. This is where the p-value comes in. The p-value is the probability of observing a difference at least as large as the one in our sample comparison if there really is no difference between the two populations.

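A small p-value therefore means the observed difference would be unlikely if there were no real effect. The conventional threshold for declaring statistical significance is a p-value below 0.05. This threshold, the significance level (α), is the false-positive rate we accept when there is truly no difference; the p-value itself is not the probability of a false positive.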

Confidence level = 1 − α (for example, α = 0.05 corresponds to a 95% confidence level)
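
To show where a p-value comes from in practice, here is a sketch of a pooled two-proportion z-test, one common frequentist way to compare two conversion rates. The choice of test, the function name `two_proportion_p_value`, and the conversion counts (200/2,000 for control A, 250/2,000 for variant B) are assumptions for illustration only.

```python
import math
from statistics import NormalDist

def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Hypothetical helper: two-sided p-value for a difference in conversion rates.

    Uses a pooled two-proportion z-test (normal approximation).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # If there is no real difference, both variants share one conversion rate.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_error
    # Probability of a difference at least this large, in either direction,
    # if there were really no difference between the populations.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

alpha = 0.05  # significance level; confidence level = 1 - alpha = 95%
z, p = two_proportion_p_value(200, 2000, 250, 2000)
print(f"z = {z:.2f}, p = {p:.4f}, significant: {p < alpha}")
```

With these invented counts the p-value falls below 0.05, so the difference would be declared statistically significant; a much smaller difference, such as 200 vs. 205 conversions, would not be.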

Notes on the p-value

- It doesn't tell us the probability that B is better than A in an A/B test

- It doesn't tell us the probability that we will make a mistake in selecting B over A

Statistical power

Statistical power is the probability that a test will detect an effect when there really is an effect to be detected (80% is a common target). It helps us weigh how large a sample a test requires.

An A/B test is underpowered when its sample size is below what the target power requires, and overpowered when its sample size is well above it.

NB: When the sample size is too small, the probability of a Type II error (a false negative, missing a real effect) increases; as the sample size grows, that probability decreases.
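
The relationship between sample size, power, and Type II errors can be sketched with a normal approximation. This assumes a two-sided test at α = 0.05 and ignores the negligible chance of a significant result in the wrong direction; the function name `approximate_power` and the 10% vs. 12.5% conversion rates are hypothetical. The Type II error rate is simply 1 minus the power.

```python
import math
from statistics import NormalDist

def approximate_power(p_control, p_variant, n_per_variant, alpha=0.05):
    """Hypothetical helper: approximate power of a two-sided two-proportion test.

    Normal approximation; ignores significant results in the wrong direction.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    std_error = math.sqrt(
        p_control * (1 - p_control) / n_per_variant
        + p_variant * (1 - p_variant) / n_per_variant
    )
    # How many standard errors separate the two rates.
    effect_in_se = abs(p_variant - p_control) / std_error
    return std_normal.cdf(effect_in_se - z_crit)

# Invented scenario: 10% baseline vs. 12.5% for the variant.
for n in (1000, 2000, 4000):
    power = approximate_power(0.10, 0.125, n)
    print(f"n = {n}: power = {power:.2f}, Type II error = {1 - power:.2f}")
```

In this scenario, 1,000 visitors per variant leaves the test badly underpowered, while 4,000 per variant pushes power well above the common 80% target.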

Sample size and how to calculate it

As with every statistical procedure, one of the most common questions is: what sample size do I need?

In A/B tests, the required sample size depends on the size of the difference we want to detect, if there's a difference to be detected at all. It also depends on the required confidence level, the desired power, and the variability of the data.

Holding the power constant, there are three main ingredients to the calculation:

1) The control group's expected conversion rate

2) The minimum relative change in conversions you want to be able to detect, i.e. the lift

3) The confidence level, whose inverse (1 minus the confidence level) is the risk of a Type I error, or false positive, that you are willing to accept
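
Those ingredients can be combined in a standard normal-approximation formula for comparing two proportions. The sketch below is one common version of it, not necessarily the formula any particular calculator uses, so results from other tools may differ slightly. The function name `sample_size_per_variant`, the 80% power default, and the 10% baseline with a 20% relative lift are assumptions for illustration.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, relative_lift, confidence=0.95, power=0.80):
    """Hypothetical helper: approximate visitors needed in EACH variant.

    baseline_rate: the control group's expected conversion rate (e.g. 0.10)
    relative_lift: minimum relative change to detect (e.g. 0.20 for +20%)
    confidence:    confidence level; alpha = 1 - confidence is the accepted
                   Type I error (false-positive) risk
    power:         probability of detecting the lift if it is real
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    alpha = 1 - confidence
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = std_normal.inv_cdf(power)
    variance_sum = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance_sum / (p2 - p1) ** 2
    return math.ceil(n)  # round up to whole visitors

print(sample_size_per_variant(0.10, 0.20))  # 3839
```

Halving the lift you want to detect, or raising the desired power, both increase the required sample size, which matches the trade-offs described above.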

Statistics traps to avoid

Common statistical traps will be discussed in a future update, under four main types:

- Regression to the mean

- Too many variants

- Click and conversion rates

- Frequentist vs. Bayesian test procedures

Conclusion

A solid grasp of statistics is central to a successful growth marketing campaign, especially when experiment ideas are abundant and the technology to implement them is available. For hands-on practice with some of the concepts discussed in this write-up, try this calculator.